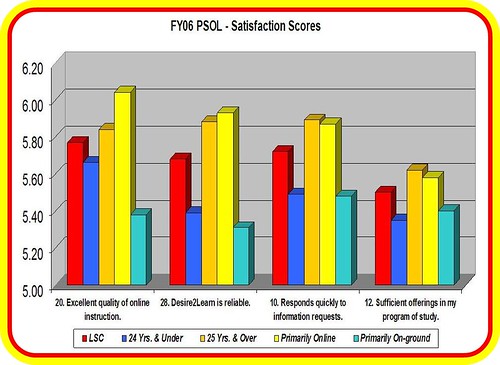

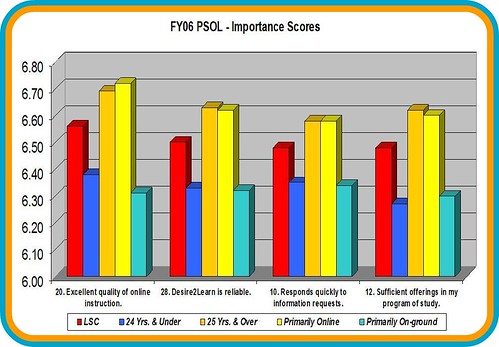

One of the important things to do with your PSOL data is to look at the gaps: the differences between the importance ratings and the satisfaction ratings expressed by the students. You’re always concerned about the larger gaps, which indicate where you have significant room for improvement, at least on items where you have some control over (or impact upon) the level of student satisfaction.

At the same time, I like to look at the smaller gaps. You also need to have some positive reinforcement for those things that are going very well – for those things where you are essentially meeting the student expectations. The seven items listed below come from the 26 standard items on the PSOL and indicate the lowest performance gaps for Lake Superior College in the FY06 satisfaction survey. The item number is shown first, followed by the full text of the item, and then the size of the gap (importance score minus satisfaction score).
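
Because each gap is simply the importance score minus the satisfaction score, ranking items by their smallest gaps takes only a few lines. The Python below is a minimal sketch, not part of any PSOL reporting tool. The `ItemScore` structure, the function names, and the sample scores are hypothetical; the scores are made-up values on the PSOL's 7-point scale, not Lake Superior College's results.

```python
from typing import NamedTuple


class ItemScore(NamedTuple):
    """Mean ratings for one survey item (hypothetical structure)."""
    label: str
    importance: float
    satisfaction: float


def performance_gap(item: ItemScore) -> float:
    """Gap = importance score minus satisfaction score.

    A negative gap means satisfaction exceeds importance.
    """
    return item.importance - item.satisfaction


def smallest_gaps(items: list[ItemScore], n: int) -> list[tuple[str, float]]:
    """Return up to n (label, gap) pairs, smallest gap first."""
    ranked = sorted(items, key=performance_gap)
    return [(item.label, round(performance_gap(item), 2)) for item in ranked[:n]]


# Made-up scores for illustration only (not actual PSOL results).
sample = [
    ItemScore("Item A", 5.10, 5.52),
    ItemScore("Item B", 6.40, 6.26),
    ItemScore("Item C", 6.70, 5.60),
]
print(smallest_gaps(sample, 2))
```

Sorting ascending puts negative gaps (items where students are more satisfied than they care) at the top, which matches how the list below is ordered.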

08. Student-to-student collaborations are valuable to me = -.42

This one is always interesting (see previous post about this item) because the students are basically telling you something like this: “I really don’t care much about this, but you’re doing a pretty good job with it!” This item has a decent (not great) satisfaction rating, but it has an extremely low importance score.

01. This institution has a good reputation = .02

This item is interesting to me because it tells me that we are serving the proper audience. Our students know who we are and what we can do for them. In the national results, the importance score is much higher than ours, which is why the national gap was .39 while ours was only .02. The satisfaction scores were almost identical, but reputation matters more to the students in the national survey. Keep in mind that many of those students are graduate students, a very different demographic from our students.

18. Registration for online courses is convenient = .14

We have a low gap here because our registration system is completely online and has been for several years. It is managed by the state system (MnSCU) and seems to meet students’ needs quite effectively. This is always one of the most important survey items for students, but one with consistently high satisfaction scores.

21. Adequate online library resources are provided = .33

For several years now we have had significant online library resources available to all students. Our biggest issue is getting students to use those resources, not whether the resources are available online.

24. Tutoring services are readily available for online courses = .33

We are currently in our fifth year of offering online tutoring services through SMARTHINKING. We have always scored highly in this category, but you also have to keep in mind that only 10-15% of the students use tutoring services (either online or on-ground) and so the importance score is one of the lowest on the survey.

23. Billing and payment procedures are convenient for me = .35

Much like our online registration, the billing and payment function is managed centrally by MnSCU for all 32 of its institutions. This is an item with a high importance score, but also a high satisfaction score.

19. Online career services are available = .35

This last item is also not very important to many of our online students, since so many of them are transfer students working on an A.A. degree or the Minnesota Transfer Curriculum. Our gap is low because this is not a hot topic for most of our students.

For me, the takeaways here are as follows:

- Small performance gaps often occur on items that students rate low in importance. That is the case for items 1, 8, 19, and 24 above. All four of those items have an importance score below 6.0, which generally indicates that most students rate them somewhere between “slightly important” and “important.”

- Small performance gaps on the other three items (18, 21, and 23) indicate areas where you are really meeting the needs of the students. These items have high importance scores (above 6.0) and high satisfaction scores. These are things to be proud of.

- Gap analysis is not an exact science. It is only a starting point in looking for places where you may be able to improve the services that are being offered to students.
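
The first two takeaways amount to a simple rule: among the small-gap items, those with importance below 6.0 have small gaps mostly because students don't care much about them, while those at or above 6.0 must also have high satisfaction for the gap to stay small. Here is a minimal Python sketch of that split. The function name and sample scores are hypothetical, the 6.0 cutoff comes from the post, and the sketch assumes the items passed in have already been selected as the smallest-gap items.

```python
# Importance cutoff from the post: below 6.0 falls between
# "slightly important" and "important" on the 7-point scale.
IMPORTANCE_THRESHOLD = 6.0


def classify_small_gap_items(items: list[tuple[str, float]]) -> dict[str, list[str]]:
    """Split (label, importance) pairs for small-gap items into two groups.

    Assumes the caller has already picked out the smallest-gap items.
    Items scoring exactly 6.0 are treated as high importance.
    """
    groups: dict[str, list[str]] = {"low importance": [], "meeting needs": []}
    for label, importance in items:
        if importance < IMPORTANCE_THRESHOLD:
            groups["low importance"].append(label)  # small gap, little interest
        else:
            groups["meeting needs"].append(label)  # small gap on an item that matters
    return groups


# Made-up importance scores for illustration only (not actual PSOL results).
sample = [("Item A", 5.10), ("Item B", 6.40), ("Item C", 5.80), ("Item D", 6.55)]
print(classify_small_gap_items(sample))
```

As the last takeaway notes, this is only a starting point: the split tells you where to look, not what to do.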

(CC Flickr photo by Annie Mole)
