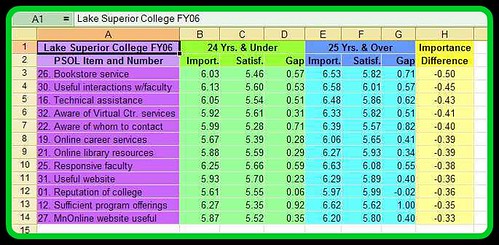

Click screenshots to enlarge. The two charts above show the 2004 results from two different Noel-Levitz surveys at LSC. The N-L Student Satisfaction Inventory (SSI) is given to a sample of students taking traditional on-ground (or Face-to-Face, F2F) courses. The N-L Priority Survey for Online Learners (PSOL) is given to online students. This post deals with six questions that appear in essentially the same form on the two surveys. I feel comfortable comparing the results on those six questions to gauge the differences in relative importance and satisfaction between the F2F students and the online students. There are an additional 6 or 7 questions that are reasonably comparable, but I will hold those for a later post.

The importance scores for these six items provide some useful information. Three of the six items indicate much greater importance from online learners, and the other three show no significant difference in the level of importance. The three with a large difference in importance are:

SSI #18. The quality of instruction I receive in most of my classes is excellent. Importance = 6.35

PSOL #20. The quality of online instruction is excellent. Importance = 6.59

SSI #46. Faculty provide timely feedback about student progress in a course. Importance = 6.01

PSOL #04. Faculty provide timely feedback about student progress. Importance = 6.47

SSI #62. Bookstore staff are helpful. Importance = 5.75

PSOL #26. The bookstore provides timely service to students. Importance = 6.37

For those same three items, the satisfaction scores are as follows.

SSI #18. The quality of instruction I receive in most of my classes is excellent. Satisfaction = 5.43

PSOL #20. The quality of online instruction is excellent. Satisfaction = 5.84

Difference of .41 (huge) in favor of online.

SSI #46. Faculty provide timely feedback about student progress in a course. Satisfaction = 5.06

PSOL #04. Faculty provide timely feedback about student progress. Satisfaction = 5.80

Difference of .74 (massive) in favor of online.

SSI #62. Bookstore staff are helpful. Satisfaction = 5.10

PSOL #26. The bookstore provides timely service to students. Satisfaction = 5.28

Difference of .18 (significant) in favor of online (and this was before we had an online bookstore).

For those other three items, the importance was about the same. Satisfaction scores are as follows.

SSI #14. Library resources and services are adequate. Satisfaction = 5.45

PSOL #21. Adequate online library resources are provided. Satisfaction = 5.61

Difference of .16 which is somewhat significant, but not huge.

SSI #45. This institution has a good reputation within the community. Satisfaction = 5.34

PSOL #01. This institution has a good reputation. Satisfaction = 5.70

Difference of .36 which is very significant.

SSI #50. Tutoring services are readily available. Satisfaction = 5.32

PSOL #24. Tutoring services are readily available for online courses. Satisfaction = 5.38

Difference of .06 which is inconsequential.
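The six satisfaction differences quoted above are simple subtractions (online minus on-ground). As a minimal sketch, here is one way to reproduce them in Python. The short item labels and variable names are my own shorthand, not anything from Noel-Levitz, and the labels "huge" or "inconsequential" are not computed here.

```python
# Satisfaction scores quoted in this post, as (on-ground SSI, online PSOL).
# The short item labels are shorthand chosen for this sketch.
satisfaction = {
    "Quality of instruction": (5.43, 5.84),
    "Timely faculty feedback": (5.06, 5.80),
    "Bookstore": (5.10, 5.28),
    "Library resources": (5.45, 5.61),
    "Institutional reputation": (5.34, 5.70),
    "Tutoring services": (5.32, 5.38),
}

# A positive difference means the online students reported higher satisfaction.
differences = {
    item: round(online - on_ground, 2)
    for item, (on_ground, online) in satisfaction.items()
}

# Print the items from the largest difference to the smallest.
for item, diff in sorted(differences.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item:26s} {diff:+.2f}")
```

All six differences come out positive, which matches the "in favor of online" notes above.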

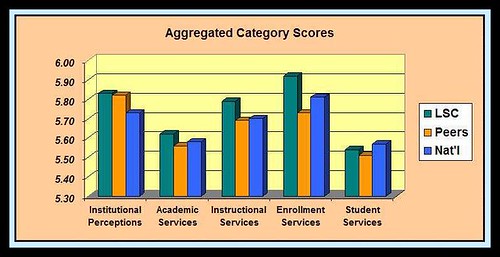

The last chart shows the “Gap Analysis,” which is a Noel-Levitz recommended measure that focuses on items where there is a large difference between importance and satisfaction. The larger the column in the chart, the greater the opportunity for improvement.

The largest gaps reported by on-ground students are in “Timely Feedback from Faculty” and “Excellent Quality of Instruction.” In both of those cases, online students reported a significantly smaller gap between their expectations and reality. The largest gap for online students was with bookstore service, even though the online students were more satisfied with the bookstore than on-ground students (they placed a higher level of importance on bookstore service). It can also be argued that the questions are not perfectly comparable, with the F2F survey asking about “helpfulness” of employees and the online survey asking about timely service. The gap for online students also decreased in the next year, after we started an online campus bookstore. More on that in a future post.
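For readers who want to check the gaps by hand: the gap is just importance minus satisfaction. The sketch below is a rough illustration in Python that uses only the three items for which this post quotes both scores. The item labels and names such as `gap` and `scores` are my own, and the other three items are left out because their importance scores are not listed here.

```python
# (importance, satisfaction) pairs quoted in this post.
# Only the three items with both scores listed are included.
scores = {
    "Quality of instruction": {"SSI": (6.35, 5.43), "PSOL": (6.59, 5.84)},
    "Timely faculty feedback": {"SSI": (6.01, 5.06), "PSOL": (6.47, 5.80)},
    "Bookstore": {"SSI": (5.75, 5.10), "PSOL": (6.37, 5.28)},
}


def gap(importance, satisfaction):
    """Gap = importance minus satisfaction, rounded to two decimals."""
    return round(importance - satisfaction, 2)


# Compute the gap for each item on each survey.
gaps = {
    item: {survey: gap(*pair) for survey, pair in by_survey.items()}
    for item, by_survey in scores.items()
}

for item, by_survey in gaps.items():
    print(f"{item:26s} SSI gap = {by_survey['SSI']:.2f}   PSOL gap = {by_survey['PSOL']:.2f}")

# Item with the largest gap on each survey, among these three items only.
largest_ssi = max(gaps, key=lambda item: gaps[item]["SSI"])
largest_psol = max(gaps, key=lambda item: gaps[item]["PSOL"])
print("Largest on-ground gap:", largest_ssi)
print("Largest online gap:", largest_psol)
```

Among these three items, the on-ground gaps for feedback and instruction are the two largest, and the bookstore is the largest online gap, which is consistent with the chart.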

The third and final chart shows the four items that were significant at the .05 level. This data set of primarily online students had nine total differences that were significant, and all nine were positive differences for LSC.

In July 2006 I attended the annual
