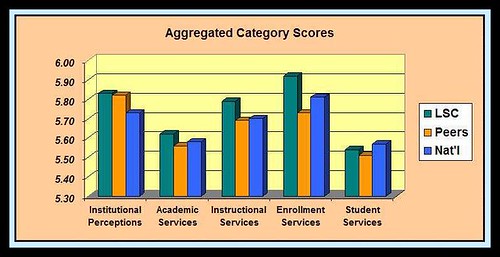

For the PSOL, Noel-Levitz creates five categories from the 26 core items that have both a satisfaction score and an importance score. Those five categories are:

- Academic services (7)

- Enrollment services (4)

- Institutional perceptions (2)

- Instructional services (8)

- Student services (5)

In each of these categories, there are two or more questions (indicated in parentheses above) that are combined to create the aggregate score.
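As a rough illustration of how the category counts fit together, the sketch below checks that the five categories account for all 26 core items and shows one plausible way to combine item scores. The simple mean in `category_score` is an assumption, since the exact Noel-Levitz aggregation method is not described here, and the scores in the example call are hypothetical placeholders, not LSC results.

```python
from statistics import mean

# Item counts per category, taken from the list above.
CATEGORY_ITEM_COUNTS = {
    "Academic services": 7,
    "Enrollment services": 4,
    "Institutional perceptions": 2,
    "Instructional services": 8,
    "Student services": 5,
}

# The five categories should cover all 26 core items.
TOTAL_CORE_ITEMS = sum(CATEGORY_ITEM_COUNTS.values())


def category_score(item_scores):
    """Combine item scores into one category score.

    Assumption: the aggregate is a simple mean of the item scores.
    The actual Noel-Levitz calculation may differ.
    """
    if len(item_scores) < 2:
        raise ValueError("each category combines two or more items")
    return mean(item_scores)


# Hypothetical placeholder scores, for illustration only.
example_score = category_score([5.0, 6.0, 7.0])
```

Keeping the counts in one dictionary makes it easy to confirm that no core item is dropped or counted twice when the categories are built.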

This column chart compares the LSC scores with those of the peer group of 13 similar two-year schools and with the national results from more than 60 schools. Our best performance is in the Enrollment Services category, and our largest opportunity for improvement is in the Student Services category.

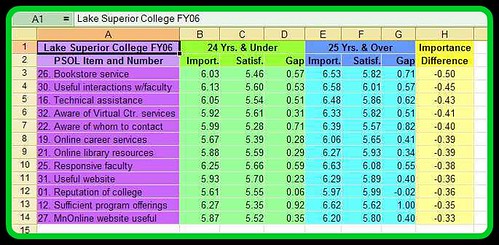

To improve these rankings, you must look at the specific survey items underlying each category and work to improve services or otherwise raise the level of satisfaction expressed by the students. These are the five questions that make up the Student Services category score:

- 10. This institution responds quickly when I request information. (Our score here is good!)

- 15. Channels are available for providing timely responses to student complaints.

- 19. Online career services are available.

- 22. I am aware of whom to contact for questions about programs and services.

- 26. The bookstore provides timely service to students. (NOT considered to be Student Services on our campus.)

The third and final chart shows the four items that were significant at the .05 level. This data set of primarily online students had nine total differences that were significant, and all nine were positive differences for LSC.
