You can count me among the skeptics of much of what is written about Generation Y (or Millennials or, as I prefer to call them, the Digital Net-Gennials). I decided to take a look at our most recent PSOL data to see whether it offers any evidence for the claims being made.

One of the traits that is claimed by the “experts” about the Digital Net-Gens is that they are team-oriented. This is often promoted as meaning that they prefer to play in groups and work in groups. There is one statement on the PSOL that appears to get at this type of information.

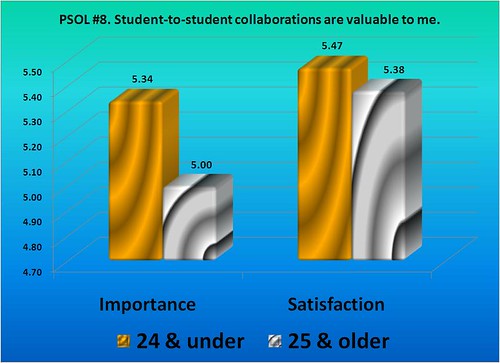

#8: Student-to-student collaborations are valuable to me.

Of the 36 items on the PSOL that are ranked for both importance and satisfaction, this one always comes out as #36, at least in importance. It also has the distinction of usually getting a higher score for satisfaction than for importance. That is very odd for survey questions of this type. Roughly translated (IMO), they are saying something like this: “I really don’t care very much about this item, but you’re doing a pretty good job with it.”

The chart above shows something that I would not have predicted. The students 24 years old and younger (roughly making up the Digital Net Gens) did in fact score the importance of this item as significantly higher than did those students who are 25 & over. NOTE: 296 students in the PSOL survey indicated their age as 24 and under while 283 students indicated age ranges of 25 and over, so the total population was almost evenly split.

The difference of .34 in importance (5.34 for the younger and 5.00 for the older) is clearly a significant difference in importance. So, even though the importance of group work (student collaborations) rates low overall for our online students, the Digital Net Gens do seem to value group work more than the older students. Go figure. I guess every once in a while some of this junk has to work out this way.

Or does it? If I make my own counterpoint to the previous point, there could easily be other factors at play here that are related to age differences, but not necessarily generational trait differences, if you follow my drift. In other words, although the younger students expressed a different level of importance for this item, it might not be because they have different generational traits (Digital Net Gens versus the Gen X-ers and Boomers), but just because older people have different life constraints.

Here’s what I’m getting at. Some other demographic differences between these groups besides the age difference include:

- Primarily on-line student or primarily on-ground student?
  - 24 & under: 49% primarily on-line enrollments
  - 25 & older: 69% primarily on-line enrollments
- Full-time work status, or part-time, or not employed?
  - 24 & under: 27% work full-time
  - 25 & older: 52% work full-time
- Residence: Own home, renter, live with relatives, etc.?
  - 24 & under: 12% own home
  - 25 & older: 61% own home
- Marital status: Married, single, or other?
  - 24 & under: 9% married
  - 25 & older: 54% married
- Parental status?
  - 24 & under: 13% have kids
  - 25 & older: 60% have kids

So, let’s add that up. Twice as many of the older group work full-time, five times as many own a home (with all the responsibilities and time suckers that come with that), six times as many are married (with all the time suckers that are implied therein), and over four times as many have kids (you know the drill).
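For anyone who wants to check my arithmetic, here is a quick sketch in Python that recomputes those multiples from the percentages listed above. The variable and function names are my own, made up for illustration. Note that these are ratios of percentages; they only translate into "times as many" students because the two groups are nearly the same size (296 versus 283).

```python
# Percentages as reported above: (24 & under, 25 & older).
# The dictionary layout and names are illustrative, not part of the PSOL.
rates = {
    "work full-time": (27, 52),
    "own home": (12, 61),
    "married": (9, 54),
    "have kids": (13, 60),
}


def ratio(younger_pct, older_pct):
    """Return how many times more common the trait is in the older group."""
    return older_pct / younger_pct


# Round to one decimal place for readability.
ratios = {trait: round(ratio(y, o), 1) for trait, (y, o) in rates.items()}

for trait, r in ratios.items():
    print(f"{trait}: {r}x as common in the 25 & older group")
```

The results come out at roughly 1.9, 5.1, 6.0, and 4.6, which is where "twice," "five times," "six times," and "over four times" come from.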

What a surprise that the older group isn’t as crazy about group work (defined currently as student-to-student collaborations) as the younger group. When, exactly, do they have the time to fit this into their schedules? And how exactly does that make the generations different from one another?